In 1985 I bought an Apple Macintosh computer. It cost $3,500 ($7,000 in today’s dollars). Soon after, Apple and other companies started selling external hard-disk drives for the Mac. They, too, were expensive. But in 1986 or ’87 the price for a hard disk came down to an “affordable” $2,000, and I and many Mac owners were tempted. In the mid-1980s, a 20-megabyte (MB) hard drive cost $2,000 ($4,000 in today’s dollars). That’s $200 per MB (in today’s dollars).

Fast forward to 2018. On my way home last week I stopped by an office-supply store and paid $139 for a 4-terabyte (TB) hard drive. That’s about $35 per TB.

What would that 4 TB hard drive have cost me if prices had remained the same as in the 1980s? Well, one terabyte is equal to a million megabytes. So, that 4 TB drive contains 4 million MB. At $200 per MB (the 1980s price) the hard drive I picked up from Staples would have cost me $800 million, not much under a billion once I paid sales taxes. But it didn’t cost that: it was just $139. Hard disk storage capacity has become millions of times cheaper in just over a generation. Or, to put it another way, for the same money I can buy millions of times more storage.
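For readers who want to check the arithmetic, here is a minimal sketch in Python. The dollar figures and capacities come straight from the paragraphs above; the function and variable names are my own illustrative choices, and I am assuming decimal units (one TB equals one million MB), as the text does.

```python
# Figures are taken from the post; all names here are illustrative only.
MB_PER_TB = 1_000_000  # decimal units: 1 TB = 1,000,000 MB (assumption from the text)


def cost_at_fixed_price(capacity_tb: float, dollars_per_mb: float) -> float:
    """Cost of a drive of `capacity_tb` terabytes at a fixed price per megabyte."""
    return capacity_tb * MB_PER_TB * dollars_per_mb


# Mid-1980s: a 20 MB drive for $4,000 in today's dollars.
price_1980s_per_mb = 4_000 / 20

# 2018: a 4 TB drive for $139.
actual_2018_price = 139
hypothetical_price = cost_at_fixed_price(4, price_1980s_per_mb)

print(f"1980s price per MB:        ${price_1980s_per_mb:,.0f}")
print(f"4 TB at the 1980s price:   ${hypothetical_price:,.0f}")
print(f"2018 price per TB:         ${actual_2018_price / 4:,.2f}")
print(f"Storage is cheaper by:     {hypothetical_price / actual_2018_price:,.0f}x")
```

The last line works out to a factor of roughly 5.8 million, which is where "millions of times cheaper" comes from.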

I can reprise these same cost reductions, focusing on computer memory rather than hard disk capacity. My 1979 Apple II had 16 kilobytes of memory. My recently purchased Lenovo laptop has 16 gigabytes, a million times more. Yet my new laptop cost a fraction of the inflation-adjusted price of that Apple II. Computer memory is millions of times cheaper. The same is true of processing power: the amount of raw computation you can buy for a dollar.

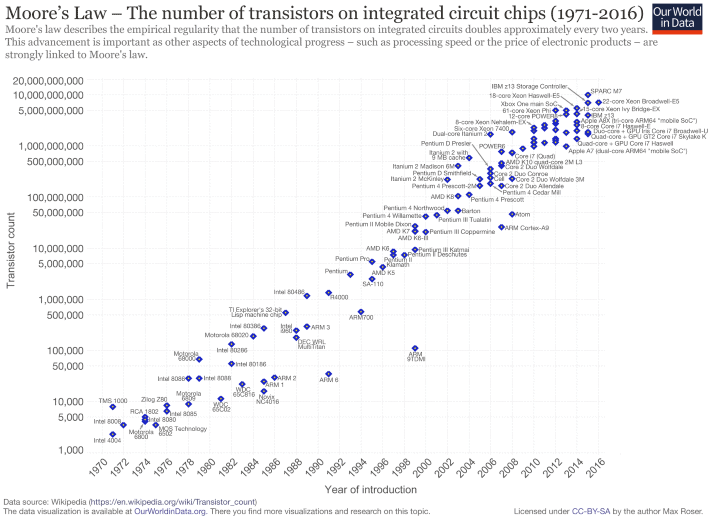

The preceding trends have been understood for half a century; they are the basis for Moore’s Law. Gordon Moore was a co-founder of Intel Corporation, one of the world’s leading computer processor and “chip” makers. In 1965, Moore published a paper in which he observed that the number of transistors in computer chips was doubling every year, and he predicted that this doubling would go on for some years to come. In 1975 he revised the pace to a doubling every two years, the figure usually quoted today. (See this post for data on the astronomical rate of annual transistor production.) Related to Moore’s Law is the price-performance ratio of computers. Loosely stated, a given amount of money will buy twice as much computing power two or three years from now.

The graph above illustrates Moore’s Law and shows the transistor count for many important computer central processing units (CPUs) over the past five decades. (Here’s a link to a high-resolution version of the graph.) Note that the graph’s vertical axis is logarithmic: each equal step up the axis multiplies the transistor count by the same factor, so what appears as a doubling in height is actually a far larger increase. In the lower-left, the graph includes the CPU from my 1979 Apple II computer, the MOS Technology 6502. That CPU chip contained about 3,500 transistors. In the upper right, the graph includes the Intel i7 processor in my new laptop. That CPU contains about 2,000,000,000 transistors, more than 500,000 times as many as the 6502 in my Apple II.

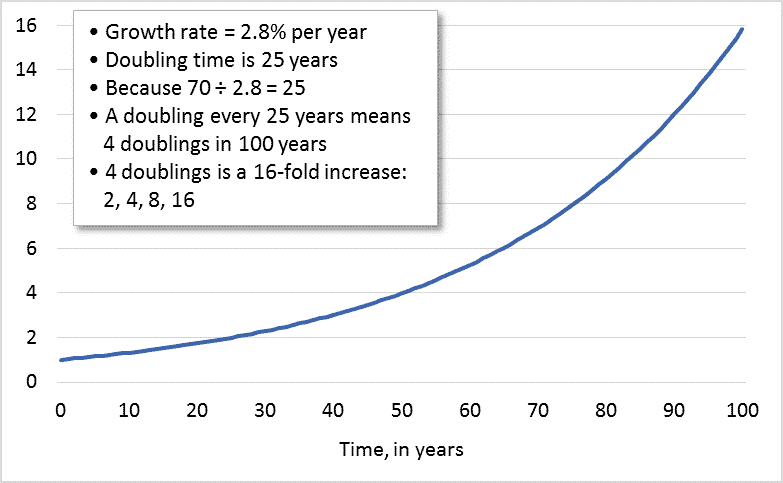

Assuming a doubling every 2 years, in the 39 years between 1979 (my Apple II) and 2018 (my Lenovo) we should have seen 19.5 doublings in the number of transistors, or about a 740,000-fold increase. This number is reasonably close to the roughly 500,000-fold increase calculated above by comparing the number of transistors in a 6502 chip to the number in an Intel i7 chip. Moreover, computing power has increased even faster than the huge increases in transistor count would indicate. Computer chips run at higher clock speeds today, and they also sport sophisticated math co-processors and graphics chips.
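The comparison between predicted and observed growth can be sketched in a few lines of Python. The transistor counts are the approximate figures quoted in this post; the helper name `growth_factor` is hypothetical, and the "implied doubling period" is simply my back-calculation from those two counts, not a measured value.

```python
import math


def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplicative growth after `years` if a quantity doubles every period."""
    return 2 ** (years / doubling_period_years)


years_elapsed = 2018 - 1979           # 39 years between the Apple II and the Lenovo
predicted = growth_factor(years_elapsed, 2)

# Approximate transistor counts quoted in the post.
transistors_6502 = 3_500
transistors_i7 = 2_000_000_000
observed = transistors_i7 / transistors_6502

# Doubling period that would exactly reproduce the observed ratio.
implied_doublings = math.log2(observed)
implied_period = years_elapsed / implied_doublings

print(f"Predicted growth (2-year doubling): {predicted:,.0f}x")
print(f"Observed growth (6502 to i7):       {observed:,.0f}x")
print(f"Implied doubling period:            {implied_period:.2f} years")
```

With these round numbers the implied doubling period comes out just over two years, so the two chips sit very near the line that Moore's Law predicts.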

In terms of civilization and the future, the key questions include: can these computing-power increases continue? Can the computers of the 2050s be hundreds of thousands of times more powerful than those of today? Can we continue making transistors smaller and packing twice as many onto a chip every two years? Can Moore’s Law continue unabated? Probably not. Transistors can only be made so small. The rate of increase in computing power will slow. We won’t see a million-fold increase in the coming 40 years like we saw in the past 40. But does that matter? What if the rate of increase in computing power fell by half, to a doubling every four years instead of every two? Starting from 2018, that would mean that in 2050 our computers would still be 256 times more powerful than they are now. And in 2054 they would be 512 times more powerful. And in 2058, 1024 times more powerful. What would it mean to our civilization if each of us had access to a thousand times more computing power?
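The slowed-down scenario is easy to tabulate. This is only a sketch of the arithmetic in the paragraph above: it assumes 2018 as the base year and a perfectly steady doubling period, and the function name `projected_multiple` is my own.

```python
def projected_multiple(target_year: int,
                       base_year: int = 2018,
                       doubling_period_years: float = 4) -> float:
    """Computing power in `target_year` relative to `base_year`,
    assuming one doubling every `doubling_period_years` years."""
    return 2 ** ((target_year - base_year) / doubling_period_years)


print("Year   4-year doubling   2-year doubling")
for year in (2050, 2054, 2058):
    slow = projected_multiple(year)                           # halved pace
    fast = projected_multiple(year, doubling_period_years=2)  # historical pace
    print(f"{year}   {slow:>15,.0f}   {fast:>15,.0f}")
```

Even at half the historical pace, 40 years still yields about a thousand-fold gain, while the historical two-year pace over the same span would give roughly a million-fold one.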

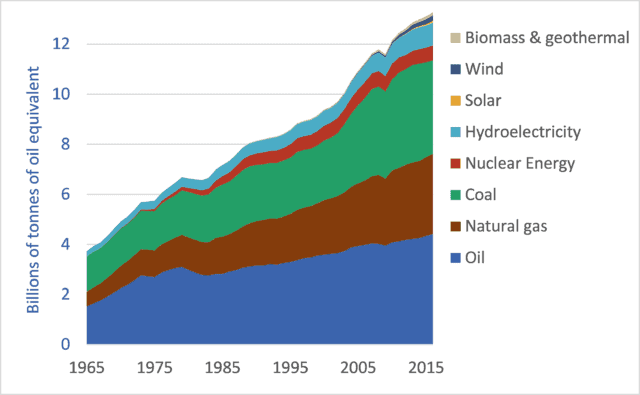

One could easily add a last, pessimistic paragraph—noting the intersection between exponential increases in computing power, on the one hand, and climate change and resource limits, on the other. But for now, let’s leave unresolved the questions raised in the preceding paragraph. What is most important to understand is that technologies such as solar panels and massively powerful computers give us the option to move in a different direction. But we have to choose to make changes. And we have to act. Our technologies are immensely powerful, but our efforts to use those technologies to avert calamity are feeble. Our means are magnificent, but our chosen ends are ruinous. Too often we become distracted by the novelty and power of our tools and fail to hone our skills to use those tools to build livable futures.